Google AdSense crawls websites to display relevant ads to users. Sometimes, AdSense may encounter errors while crawling a website, which can impact the delivery of ads.

For publishers who focus on monetizing their ad inventory, it helps to know what the AdSense crawler is and how to fix the errors it reports.

Fixing these errors helps Google’s crawler access your site, so AdSense can display ads that are more relevant to your content, which can drastically improve your ad revenue.

So before we ramble on, let’s clarify that the AdSense crawler (user agent Mediapartners-Google) is different from other Google bots such as Googlebot: it indexes website content so that AdSense can serve appropriate ads.

More often than not, this type of crawler will access site URLs where the AdSense tags are already implemented, including pages that redirect. This is why regular website maintenance is imperative to avoid any issues with Google.

Google crawls websites automatically at any time, while reports are usually updated weekly. You may find a variety of errors when checking the crawler report in your AdSense account, and we can help you figure out exactly what to do.

Different Types of Crawler Errors

1) Page Not Found (404 error)

The AdSense crawler received a 404 error while accessing a page. Check whether the page exists and whether the URL is correct. If the page no longer exists, either redirect it to a relevant page or remove the broken link.

A temporary URL can also cause this error. Google’s Webmaster URL Parameters tool was once a handy way to find such pages, but Google retired it in 2022.
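To find broken pages before the crawler does, you can request your own URLs and look at the status codes. The following is a minimal Python sketch using only the standard library; the helper names (`fetch_status`, `classify_status`) are hypothetical, and sending `Mediapartners-Google` as the User-Agent only imitates the AdSense crawler’s token, so it won’t reproduce every detail of a real crawl.

```python
import urllib.error
import urllib.request

# Assumption: imitating the AdSense crawler's User-Agent token. This does not
# reproduce the real crawler (IP addresses, timing, rendering).
CRAWLER_UA = "Mediapartners-Google"


def classify_status(status: int) -> str:
    """Map an HTTP status code to the crawler error types in this post."""
    if 200 <= status <= 299:
        return "ok"
    if status == 404:
        return "page not found (404)"
    if 500 <= status <= 599:
        return "server error (5xx)"
    return f"other ({status})"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for url.

    urlopen follows redirects, so a redirect to a missing page reports 404.
    Network failures (DNS, timeouts) raise urllib.error.URLError.
    """
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": CRAWLER_UA}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses arrive as HTTPError; its code is the status.
        return err.code
```

Call `classify_status(fetch_status("https://example.com/some-page"))` for each URL you want to test. Some servers answer HEAD requests with 405, in which case switching `method` to `"GET"` is a simple workaround.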

2) Robots.txt file blocked

The robots.txt file is a powerful tool for controlling how search engine bots and other crawlers access your website, and how you configure it directly affects your AdSense performance. Here’s a closer look:

Wildcards and Pattern Matching:

- The Asterisk (*) Wildcard: This wildcard represents any sequence of characters. It’s incredibly useful for blocking or allowing access to a group of similar pages or files. Example: `Disallow: /images/*` blocks access to all files within the `/images/` directory.

- Dollar Sign ($) End-of-Line Matching: This matches the end of a URL. Example: `Disallow: /*.pdf$` blocks access to all URLs ending in .pdf, such as the PDFs on your site.
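To check how a wildcard rule applies before you deploy it, the matching behavior above can be sketched in Python. This is a simplified, hypothetical helper: it only translates `*` and a trailing `$` into a regular expression and tests whether a path matches; it does not implement the precedence rules Google uses when several rules match the same URL.

```python
import re


def robots_pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any sequence of characters (including none); a trailing
    '$' means the URL must end there. All other characters are literal,
    and matching always starts at the beginning of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then rejoin the pieces with '.*'.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a robots.txt rule pattern applies to the URL path."""
    # re.match anchors at the start of the string, like robots.txt rules.
    return robots_pattern_to_regex(pattern).match(path) is not None
```

For example, `rule_matches("/*.pdf$", "/docs/guide.pdf")` is true, while `rule_matches("/*.pdf$", "/docs/guide.pdf?download=1")` is false, because the `$` requires the URL to end right after `.pdf`.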

The AdSense crawler can’t access the website because the site’s robots.txt file blocks its user agent, Mediapartners-Google.

To resolve this, remove these two lines (or any other rule that blocks Mediapartners-Google) from your robots.txt file to grant the AdSense crawler access:

User-agent: Mediapartners-Google
Disallow: /

Disallow vs. Noindex:

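- Disallow: This directive tells crawlers not to access a specific URL or section of your website. A disallowed page won’t be crawled, so the AdSense crawler can’t read its content, and AdSense may show fewer or less relevant ads there, or none at all. (Disallow controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.)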

- Noindex: This directive, placed in the `<head>` section of a page’s HTML (for example, `<meta name="robots" content="noindex">`), tells search engines not to include the page in their index, even if they crawl it. This means the page can still be crawled by AdSense, potentially allowing ads to appear, but it won’t show up in search results.

Implications for AdSense:

- If you want to completely prevent AdSense from accessing a page, use `Disallow` in your robots.txt, in a group addressed to `Mediapartners-Google`.

- If you want to allow AdSense to crawl a page for potential ad serving but prevent it from appearing in search results, use the `noindex` meta tag.

User-agent Specific Rules:

You can create specific rules for different crawlers by using the `User-agent` directive. This allows you to fine-tune access for different bots. Example:

User-agent: *
Disallow: /private/

User-agent: Mediapartners-Google
Allow: /

User-agent: Googlebot-Image
Disallow: /images/

In this example:

- The first group blocks the `/private/` directory for every crawler that doesn’t have a group of its own.

- The second group specifically allows the AdSense crawler (`Mediapartners-Google`) access to the entire site.

- The third group blocks Google’s image crawler (`Googlebot-Image`) from the `/images/` directory. Because a crawler follows only the most specific group that matches its user agent, Googlebot-Image ignores the `*` group, so it is not blocked from `/private/`.

By mastering these robots.txt techniques, you can help ensure that AdSense crawlers have optimal access to your website’s content, leading to better ad targeting and potentially higher revenue.
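Python’s standard library ships a robots.txt parser, `urllib.robotparser`, which you can use to sanity-check the example above and the two blocking lines from earlier. This sketch assumes the rules are pasted in as strings, and the `can_crawl` helper is hypothetical. Note that `RobotFileParser` does simple prefix matching and does not understand the `*` and `$` wildcards inside paths, so it is a rough check rather than a reproduction of Google’s parser.

```python
from urllib.robotparser import RobotFileParser

# The user-agent example from this section.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/

User-agent: Mediapartners-Google
Allow: /

User-agent: Googlebot-Image
Disallow: /images/
"""

# The two lines that block the AdSense crawler from the whole site.
BLOCKING_ROBOTS = """\
User-agent: Mediapartners-Google
Disallow: /
"""


def can_crawl(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if user_agent may fetch path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    checks = [
        ("Mediapartners-Google", "/private/report.html"),
        ("SomeOtherBot", "/private/report.html"),
        ("Googlebot-Image", "/images/logo.png"),
        ("Googlebot-Image", "/private/report.html"),
    ]
    for agent, path in checks:
        print(agent, path, can_crawl(EXAMPLE_ROBOTS, agent, path))
```

Running the script prints `True` for the first and last checks and `False` for the middle two, which shows that the `User-agent: *` group doesn’t apply to crawlers that have a group of their own.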

3) Content Behind a Login

Many websites require users to log in to access premium content. This error usually means that a crawler login hasn’t been set up for that content.

As with error #2 above, Google will not be able to access this page, and Google ads will not be served.

Imagine you have thousands of premium users: how many impressions would you lose?

Thankfully, this is very simple to resolve. Sign in to your Google AdSense account, go to Settings > Access and authorization > Crawler access, and provide login details the crawler can use to access your site.

You may want to check out Google’s step-by-step guide to displaying ads on login-protected pages.

4) Ad Crawler Errors for a Site You Don’t Manage

This error means that someone is using your ad code on a different site without your permission. Impressions and clicks on that site are still counted but won’t be paid out, so you won’t earn from this unauthorized traffic.

If this ever happens, enable “Only allow certain sites to display ads for my account.” This option is available under Settings > Access and authorization. Changes can take up to 48 hours to take effect.

Crawler issues are remarkably straightforward and easy to fix, and you don’t even need a Swedish assembly guide.

5) Server errors (5xx error)

The AdSense crawler encountered a server error while accessing a page. Check your server logs for errors and contact your web hosting provider to fix the server issue.
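If your server keeps access logs, a short script can show which URLs returned 5xx errors to the AdSense crawler. This sketch assumes the Apache/Nginx "combined" log format and identifies the crawler by the `Mediapartners-Google` token in the User-Agent field; the regex and the `crawler_server_errors` helper are illustrative, so adapt them to your own log format.

```python
import re
from collections import Counter
from typing import Iterable

# Assumption: Apache/Nginx "combined" log format, e.g.
# 1.2.3.4 - - [10/Oct/2024:13:55:36 +0000] "GET /page HTTP/1.1" 503 512 "-" "UA"
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def crawler_server_errors(
    lines: Iterable[str], agent_token: str = "Mediapartners-Google"
) -> Counter:
    """Count 5xx responses served to the given crawler, grouped by URL path."""
    errors = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        if match is None:
            continue  # skip lines that are not in the combined format
        if agent_token in match["agent"] and match["status"].startswith("5"):
            errors[match["path"]] += 1
    return errors
```

For example, `with open("access.log") as log: print(crawler_server_errors(log).most_common(10))` lists the paths that failed most often, which is useful detail to pass on to your hosting provider.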

6) Page Speed and Rendering

Page speed is crucial for both user experience and AdSense performance. Google’s Core Web Vitals, a set of metrics that measure user experience, are also important for AdSense.

- Impact of slow rendering: Slow loading times can prevent AdSense crawlers from fully accessing and understanding your content. This can lead to fewer ad impressions or less relevant ads.

- Optimization tips:

- Minimize render-blocking resources (CSS, JavaScript).

- Optimize images (compress, use appropriate formats).

- Use browser caching.

- Consider a Content Delivery Network (CDN).

Dynamic Rendering:

Dynamic rendering is a technique where you serve a simplified, static version of your website to crawlers like the AdSense bot (Mediapartners-Google), while still presenting the full, dynamic version to regular users. This helps the crawler understand and index your content, even if your site relies heavily on JavaScript.

- How it works: Your server detects the user-agent of the incoming request. If it’s a crawler, it serves a pre-rendered, static HTML version of the page. If it’s a regular user, it serves the dynamic, JavaScript-powered version.

- Benefits for AdSense: Improved crawling and indexing, leading to better ad targeting and potentially higher revenue.

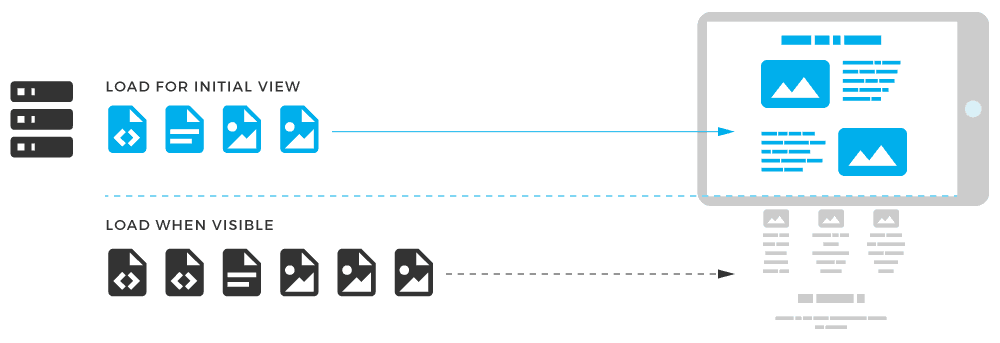
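The user-agent check described above can be sketched as a tiny WSGI application in Python. It is a hypothetical illustration, not a production setup: `CRAWLER_TOKENS`, `make_app`, and keeping pre-rendered HTML in a dictionary are all assumptions made for the example; a real site would typically generate the static snapshots with a headless browser or a rendering service.

```python
from typing import Callable, Iterable, Mapping

# Hypothetical list of crawler User-Agent tokens that should get static HTML.
CRAWLER_TOKENS = ("Mediapartners-Google", "Googlebot")


def is_crawler(user_agent: str) -> bool:
    """Return True if the User-Agent header contains a known crawler token."""
    agent = user_agent.lower()
    return any(token.lower() in agent for token in CRAWLER_TOKENS)


def make_app(prerendered: Mapping[str, str], app_shell: str) -> Callable:
    """Build a WSGI app that serves pre-rendered HTML to crawlers.

    prerendered maps URL paths to static HTML snapshots; app_shell is the
    JavaScript-driven page that regular visitors receive.
    """

    def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "/")
        agent = environ.get("HTTP_USER_AGENT", "")
        if is_crawler(agent) and path in prerendered:
            body = prerendered[path]
        else:
            body = app_shell
        data = body.encode("utf-8")
        start_response(
            "200 OK",
            [
                ("Content-Type", "text/html; charset=utf-8"),
                ("Content-Length", str(len(data))),
                # Tell caches that the response depends on the User-Agent.
                ("Vary", "User-Agent"),
            ],
        )
        return [data]

    return app
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, make_app({"/": "<h1>Article text</h1>"}, "<div id='app'></div>")).serve_forever()` serves the app on port 8000. The `Vary: User-Agent` header matters because a CDN or proxy would otherwise risk caching the crawler version and showing it to users.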

Lazy Loading:

Lazy loading is a technique where images and other elements are only loaded when they are about to become visible on the user’s screen. While this can improve initial page load time, it can sometimes interfere with AdSense.

- The problem: If AdSense crawlers don’t see the lazy-loaded content, they may not be able to analyze the page and serve relevant ads properly.

- Solutions:

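- Ensure lazy-loaded content is still accessible to crawlers, for example by using the browser’s native lazy loading (the `loading="lazy"` attribute) or the IntersectionObserver API rather than loading content only in response to scrolling or clicks.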

- Prioritize loading ads or content near the top of the page.

- Consider using a “pre-lazy load” technique to load important elements (including ad units) before lazy loading kicks in.
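One crawler-friendly approach is the browser’s native `loading="lazy"` attribute on images, combined with eager loading for the first few images near the top of the page. The Python helper below is a hypothetical sketch of how a template might emit those tags; the `eager_count` default of 2 is an arbitrary assumption, not an AdSense recommendation.

```python
from html import escape
from typing import Sequence, Tuple


def render_images(images: Sequence[Tuple[str, str]], eager_count: int = 2) -> str:
    """Render <img> tags: the first eager_count load eagerly, the rest lazily.

    images is a sequence of (src, alt) pairs in page order.
    """
    tags = []
    for index, (src, alt) in enumerate(images):
        # Content near the top of the page loads immediately; the rest waits
        # until the browser decides it is close to the viewport.
        loading = "eager" if index < eager_count else "lazy"
        tags.append(
            f'<img src="{escape(src)}" alt="{escape(alt)}" loading="{loading}">'
        )
    return "\n".join(tags)
```

Because the lazy images stay in the HTML with their real `src`, a crawler that never scrolls can still see them; only the browser’s download timing changes.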

7) No Content

The AdSense crawler may not find any relevant content on a page. Make sure each page has enough content that is relevant to its topic and includes targeted keywords.
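A quick way to spot thin pages is to count the words of visible text in their HTML. This Python sketch uses the standard library’s `html.parser`; the class and function names are hypothetical, and the 300-word threshold in `looks_thin` is an arbitrary placeholder, not an AdSense requirement. Word count alone also says nothing about relevance.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect text nodes, skipping script, style and similar elements."""

    SKIPPED_TAGS = {"script", "style", "noscript", "template"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)


def word_count(html: str) -> int:
    """Approximate number of visible words in an HTML document."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


def looks_thin(html: str, min_words: int = 300) -> bool:
    """Flag pages below a word-count threshold (300 is a placeholder)."""
    return word_count(html) < min_words
```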

8) Invalid URL

The AdSense crawler may encounter a malformed URL. Make sure all URLs are properly formatted and that spaces and special characters are percent-encoded (for example, a space becomes %20) rather than used raw.
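Malformed URLs are easy to catch with Python’s `urllib.parse`. This hypothetical checker flags a few common problems (a non-HTTP scheme, a missing host, raw whitespace), and `encode_path` percent-encodes characters such as spaces instead of removing them. It is a sketch, not a full URL validator.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def url_problems(url: str) -> list:
    """Return human-readable problems found in url (empty list if none)."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        problems.append("scheme is not http or https")
    if not parts.netloc:
        problems.append("missing host")
    if any(ch.isspace() for ch in url):
        problems.append("contains whitespace")
    return problems


def encode_path(url: str) -> str:
    """Percent-encode unsafe characters in the URL path (e.g. space -> %20).

    Existing %XX escapes are kept because '%' is treated as safe.
    """
    parts = urlsplit(url.strip())
    path = quote(parts.path, safe="/%")
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))
```

For example, `encode_path("https://example.com/my post")` returns `"https://example.com/my%20post"`.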

Fastest Ways to Fix AdSense Crawler Errors

As a website owner and AdSense publisher, you can fix AdSense crawler errors on your own in several ways:

- Check your robots.txt file: Ensure that your robots.txt file is not blocking the AdSense crawler. You can review the file in the robots.txt report in Google Search Console, which replaced the robots.txt Tester in 2023.

- Fix 404 errors: If the AdSense crawler encounters a 404 error while accessing a page, check whether the page exists and whether the URL is correct. If the page doesn’t exist, either redirect it to a relevant page or remove the broken link.

- Fix server errors: If the AdSense crawler encounters server errors while accessing a page, check your server logs for errors and contact your web hosting provider to fix the server issue.

- Optimize your website’s performance: Ensure that your website loads quickly by minimizing the use of large images and scripts, using caching, and using a content delivery network (CDN).

- Create sufficient content: Ensure that each page on your website has sufficient content that is relevant to the topic and includes targeted keywords.

- Ensure that your URLs are properly formatted: Check that all your URLs are properly formatted and that spaces and special characters are percent-encoded.

- Ads near High-Engagement Areas: Consider placing ads near areas of high user engagement, such as the end of an article or near a call-to-action.

- Ads Above the Fold: Limit the number of ads “above the fold” (the area of the page visible without scrolling). Too many ads here can overwhelm users and disrupt their initial impression of your site.

- Less is Often More in Ad Density: Avoid overwhelming users with too many ads. A cluttered page can lead to a negative user experience and may even affect AdSense crawling.

Conclusion

Were you able to find the solution to your AdSense crawler issues? If not, our expert team could take a look and provide a definitive solution.

Sign up for a Starter account at MonetizeMore today and let us fix your AdSense crawler issues!

Additional FAQ

How do I fix my AdSense crawler errors?

There are many different reasons for AdSense crawler errors, including robots.txt denials, 404 errors, and more. We discuss each type of error and how to fix it in this post.

How do I stop AdSense irrelevant ads?

To block irrelevant or unwanted ads, you can block specific advertisers or ad categories in your AdSense dashboard. Google provides more information on blocking options in AdSense here: https://ift.tt/PT1qwtK.

source https://www.monetizemore.com/blog/common-adsense-crawler-issues-fix/
