The emergence of ChatGPT and similar AI technologies brings both opportunities and challenges. Publishers need to examine the potential impact of ChatGPT on automated (bot) traffic and devise effective strategies to mitigate its negative consequences. This article explores the implications of ChatGPT for bot traffic generation, content scraping, and intellectual property infringement, and provides actionable initiatives to address these concerns.

1. Facilitation of Bot Traffic Generation Through Code Creation

ChatGPT’s ability to generate code is an invaluable tool for education and troubleshooting. However, malicious actors may exploit this capability to produce code that targets websites with automated bot traffic. Ad-based websites, which depend on authentic user engagement for revenue generation, are particularly vulnerable.

Because advertisers typically pay per impression or per click, bot-generated engagement artificially inflates the very metrics they are billed on. As a result, advertisers may end up paying for interactions initiated by bots rather than by real users.
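
To make the cost concrete, the sketch below estimates how much of a campaign's budget goes to bot impressions under CPM (cost per thousand impressions) billing. The function name `wasted_spend` and all of the figures are hypothetical, chosen only for illustration; real campaigns often mix CPM and CPC pricing, and bot shares vary widely.

```python
def wasted_spend(impressions, bot_share, cpm):
    """Estimate spend on bot impressions under CPM billing (illustrative only).

    impressions: total billed impressions
    bot_share:   fraction of those impressions generated by bots (0.0 to 1.0)
    cpm:         price paid per 1,000 impressions
    """
    if not 0.0 <= bot_share <= 1.0:
        raise ValueError("bot_share must be between 0 and 1")
    bot_impressions = impressions * bot_share
    # CPM is priced per thousand impressions, so divide by 1,000 before multiplying.
    return bot_impressions / 1000 * cpm


# Hypothetical campaign: 2,000,000 impressions at a $3.00 CPM with 15% bot traffic.
print(f"Spend on bot impressions: ${wasted_spend(2_000_000, 0.15, 3.00):,.2f}")
```

With these hypothetical inputs, 15% of 2,000,000 impressions is 300,000 bot impressions, which at a $3.00 CPM comes to $900 of spend that never reached a human.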

This malpractice not only leads to financial losses for advertisers but also erodes trust in online advertising platforms. Advertisers expect their investments to reach and engage real human audiences. When their ads are displayed to bot traffic instead, it undermines the effectiveness and value of their advertising campaigns. Such incidents can damage the reputation of the advertising platform and the publishers associated with it, as advertisers become wary of allocating their budgets to platforms susceptible to bot fraud.

Publishers must be aware of this potential impact and take appropriate measures to mitigate the risks. These measures include implementing robust fraud detection and prevention mechanisms, partnering with reputable ad networks that employ sophisticated bot detection algorithms, and monitoring traffic patterns for anomalies that may indicate bot activity. By actively addressing bot traffic, publishers can maintain the integrity of their engagement metrics and build trust with advertisers.
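
As a rough illustration of monitoring traffic patterns for anomalies, the following sketch flags hours whose request counts sit far above the average, using a plain z-score. The function name and the threshold of 3 standard deviations are assumptions for illustration; production bot detection combines many more signals (user agents, session behavior, IP reputation) and is not reducible to a single statistic.

```python
from statistics import mean, stdev


def flag_anomalies(hourly_counts, threshold=3.0):
    """Return the indices of hours whose request count is far above the series mean.

    A simple z-score test: (count - mean) / standard deviation > threshold.
    """
    if len(hourly_counts) < 2:
        return []  # stdev needs at least two data points
    mu = mean(hourly_counts)
    sigma = stdev(hourly_counts)
    if sigma == 0:
        return []  # perfectly flat traffic has no outliers
    return [i for i, count in enumerate(hourly_counts) if (count - mu) / sigma > threshold]


# Hypothetical day: steady traffic with one burst in the final hour.
counts = [1000] * 23 + [9000]
print(flag_anomalies(counts))
```

One design caveat: a large spike also inflates the standard deviation it is measured against, so on short series a median-based baseline is often more robust.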

2. Intensification of Content Scraping

ChatGPT and similar systems can carry out a wide range of tasks, including web scraping, which entails extracting data from websites for diverse purposes. Regrettably, the spread of ChatGPT-like systems may lead to a surge in content scraping, in which online content is duplicated, with or without authorization, and then repurposed or monetized.

When scraped content is monetized elsewhere, publishers are deprived of the traffic and engagement that their original content would have generated. Moreover, content scraping can place an undue burden on the servers of targeted websites. When numerous scraping requests are made, it can cause increased server load and bandwidth usage, potentially leading to degraded performance or even downtime. Legitimate users may experience slower page loading times or difficulties accessing the website, affecting their overall user experience.
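
To see which clients are driving that load, a publisher can count requests per client address in the web server's access log. This sketch assumes each log line begins with the client IP, as in the Common Log Format and Nginx's default `combined` format; the function name `heavy_clients` and the 20% cut-off are hypothetical. A large share from one address is a hint worth investigating, not proof of scraping, since proxies and corporate gateways can also concentrate traffic.

```python
from collections import Counter


def heavy_clients(log_lines, min_share=0.2):
    """Return (client_ip, request_count) pairs for clients that sent at least
    `min_share` of all requests, busiest first.

    Assumes the client IP is the first whitespace-separated field of each line.
    """
    counts = Counter(line.split()[0] for line in log_lines if line.strip())
    total = sum(counts.values())
    if total == 0:
        return []
    return [(ip, n) for ip, n in counts.most_common() if n / total >= min_share]


# Hypothetical log excerpt using documentation-range IP addresses.
sample = (
    ['203.0.113.5 - - [10/Jun/2023:10:00:01 +0000] "GET /article-1 HTTP/1.1" 200 5120'] * 8
    + ['198.51.100.7 - - [10/Jun/2023:10:00:02 +0000] "GET / HTTP/1.1" 200 2048']
    + ['192.0.2.1 - - [10/Jun/2023:10:00:03 +0000] "GET /about HTTP/1.1" 200 1024']
)
print(heavy_clients(sample))
```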

From a legal perspective, content scraping raises concerns regarding copyright infringement and intellectual property rights. Unauthorized scraping and reproduction of content without proper attribution or permission may violate copyright laws. Publishers have the right to protect their original works and control how they are used and distributed.

To address the intensification of content scraping, publishers can employ various measures. These may include implementing technological safeguards such as bot detection algorithms, CAPTCHA systems, or rate limiting mechanisms to detect and mitigate scraping attempts. Additionally, publishers can leverage legal avenues to protect their content, including copyright registration, issuing takedown notices to infringing parties, and pursuing legal action against repeated offenders.
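
Rate limiting is commonly implemented with a token bucket: each client gets a bucket that refills at a fixed rate, and every request spends one token, so short bursts are tolerated while sustained high-volume scraping is throttled. The minimal in-memory sketch below assumes one bucket per client key (for example, an IP address); the class names and parameters are illustrative, and a real deployment would usually enforce limits at a CDN, a reverse proxy, or a shared store such as Redis rather than inside a single process.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so a new client can make a short burst
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """Keeps one TokenBucket per client key, such as an IP address (hypothetical design)."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets = {}

    def allow(self, client_id):
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self.buckets[client_id] = bucket
        return bucket.allow()


# Example: 1 request per second sustained, bursts of up to 5.
limiter = RateLimiter(rate=1.0, capacity=5)
print([limiter.allow("203.0.113.5") for _ in range(7)])
```

In a request handler, a `False` from `allow` would typically translate into an HTTP 429 (Too Many Requests) response.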

It is essential for publishers to stay vigilant and actively monitor their content for unauthorized duplication. By taking proactive steps to safeguard their intellectual property and mitigate the impact of content scraping, publishers can maintain control over their content, protect their revenue streams, and uphold their legal rights.

3. Intellectual Property and Copyright Infringement Concerns

An increase in content scraping, aided by ChatGPT and similar technologies, could result in widespread intellectual property and copyright infringement. This escalating trend threatens the ability of original content creators to derive appropriate benefits from their work.

Content scraping involves the unauthorized extraction and republishing of online content, often without providing proper attribution or seeking permission from the original creators. This practice undermines the fundamental principles of intellectual property, where creators should have the right to control the distribution, usage, and monetization of their works.

When content is scraped and republished without consent, it diminishes the revenue potential for the original creators. They lose out on opportunities to monetize their content through legitimate channels, such as advertising, subscriptions, or licensing agreements. Additionally, the lack of proper attribution deprives them of the recognition and credibility they deserve for their creative efforts.

Furthermore, content scraping can lead to a diluted online environment where duplicated content floods various platforms. This not only creates confusion for users seeking reliable and authentic sources but also hampers the growth and diversity of original content. The prevalence of scraped content diminishes the incentive for creators to invest their time, resources, and talent into producing high-quality, original work.

From a legal standpoint, content scraping without permission often infringes upon copyright laws. These laws grant exclusive rights to content creators, including the right to reproduce, distribute, display, and adapt their works. Unauthorized scraping and republishing of copyrighted material infringe upon these rights, potentially subjecting the perpetrators to legal consequences.

To address these concerns, content creators and publishers must remain vigilant in monitoring and protecting their intellectual property. They can employ various strategies to combat content scraping, including implementing technical measures to detect and prevent scraping attempts, watermarking or digitally fingerprinting their content, and pursuing legal actions against repeat offenders.
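
Digital fingerprinting can be illustrated with word shingling: break a text into overlapping runs of k words, hash each run, and compare two documents by the Jaccard similarity of their hash sets. The sketch below is a minimal version of that general near-duplicate detection technique, not a description of any particular product; the shingle size of 5 and the function names are assumptions. It does not cover watermarking, which embeds a marker in the content itself.

```python
import hashlib
import re


def shingles(text, k=5):
    """Split text into overlapping runs of k lower-cased words."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)} if words else set()
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def fingerprint(text, k=5):
    """Hash each shingle to a short hex digest so fingerprints are compact to store."""
    return {hashlib.sha1(s.encode("utf-8")).hexdigest()[:16] for s in shingles(text, k)}


def similarity(fp_a, fp_b):
    """Jaccard similarity of two fingerprint sets: 1.0 identical, 0.0 disjoint."""
    if not fp_a and not fp_b:
        return 0.0
    return len(fp_a & fp_b) / len(fp_a | fp_b)


original = ("ChatGPT and similar systems can execute various tasks including "
            "web scraping which entails extracting data from websites")
scraped = "Breaking news: " + original
unrelated = "The quick brown fox jumps over the lazy dog today"

print(round(similarity(fingerprint(original), fingerprint(scraped)), 2))
print(similarity(fingerprint(original), fingerprint(unrelated)))
```

A scraped copy that merely prepends a headline still shares most of its shingles with the original, so its similarity stays high, while unrelated text scores zero.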

Mitigating Measures

Given these potential impacts, it is imperative to develop strategies to mitigate the misuse of ChatGPT and similar technologies. These strategies include implementing stricter bot detection mechanisms, developing tools to assess the protection levels of websites against content scrapers, educating publishers on the potential risks, and establishing legal frameworks that address the unauthorized use of such technologies.
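
One simple input to a tool that assesses a site's exposure to AI-related crawlers is its robots.txt file. The sketch below uses Python's standard `urllib.robotparser` to report whether specific crawler user agents may fetch a given URL. The user-agent list (`GPTBot`, published by OpenAI, and `CCBot`, used by Common Crawl) and the function name are illustrative assumptions, and robots.txt is purely advisory: compliant crawlers honor it, but malicious scrapers simply ignore it, so this measures a site's stated policy rather than its actual protection.

```python
from urllib.robotparser import RobotFileParser

# Illustrative crawler tokens; extend the tuple to cover the bots you care about.
AI_CRAWLERS = ("GPTBot", "CCBot")


def crawler_access(robots_txt, url, agents=AI_CRAWLERS):
    """Report, per user agent, whether the given robots.txt permits fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


robots = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""
print(crawler_access(robots, "https://example.com/articles/chatgpt-bots"))
```

To check a live site instead of a string, `RobotFileParser` can be pointed at the file with `set_url()` and loaded with `read()`.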

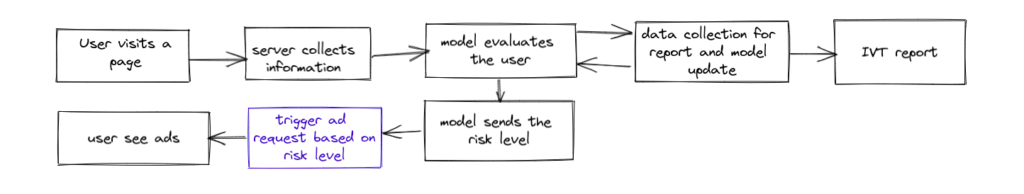

To mitigate the potential negative impacts of ChatGPT and similar technologies, publishers can benefit from utilizing advanced bot detection solutions like Traffic Cop. Traffic Cop, an award-winning bot-blocking solution offered by MonetizeMore, provides an effective defense against bot-generated traffic, safeguarding the integrity of engagement metrics and protecting advertisers’ investments.

With its advanced algorithms and machine learning capabilities, Traffic Cop is designed to detect and block malicious bots, click farms, and other fraudulent activities. By integrating Traffic Cop into their websites, publishers can ensure that their ad-based platforms are shielded from the adverse effects of bot traffic, such as inflated engagement metrics, inaccurate performance metrics, and wasted ad spend.

This comprehensive bot-blocking solution not only improves the accuracy and reliability of engagement metrics but also helps maintain advertisers’ trust in the platform. By proactively blocking bots, Traffic Cop ensures that advertisers’ budgets are allocated towards genuine user engagement, resulting in increased transparency and a higher return on investment.

Moreover, Traffic Cop provides publishers with detailed insights and reports on bot traffic, allowing them to analyze patterns, understand the scale of the bot problem, and take the measures needed to further optimize their ad performance and user experience.

By leveraging Traffic Cop, publishers can confidently navigate the challenges posed by malicious bot activities, ensuring a more secure and trustworthy advertising environment for both publishers and advertisers. It empowers publishers to take control of their websites, protect their revenue streams, and strengthen their relationships with advertisers.

source https://www.monetizemore.com/blog/how-chatgpt-impacts-automated-bot-traffic/
